3D modelling is extremely useful and has many applications. Software such as Maya gives you a huge set of tools: you can build very detailed models, view a model from any angle, and make very small adjustments. In the first part of this assignment I will talk about the different things 3D modelling is used for.

Product Design

When a company is designing a product, it will want a realistic view of what the product could look like beforehand, so that it can make changes before the final product is made. This is where 3D modelling comes in. First, basic sketches of the product are made, then more detailed ones. Eventually the sketches are brought into 3D modelling software such as Maya, and a 3D model is built from them. Once there is a 3D model of the product, final tweaks can be made until the design is right. In a way, 3D modelling is like creating a prototype, but instead of a physical prototype, it exists on the computer. This is generally easier than building something physical, because it saves materials and, being digital, is easy to store and change.

Below you can see an example. A controller is being modelled in Maya, using two reference drawings: a side view and a top view.

Architecture

3D modelling for architecture is similar to product design. It's used like a virtual prototype. When building something big, you don't want to mess up, so by creating a 3D model you can get a detailed view of what the finished building will look like, and check if there are any errors in the layout. Below you can see an example of a building that has been created in a 3D modelling program.

Realistic models of buildings can also be imported into a game engine such as Unreal Engine. This lets you set up a first-person camera and actually walk around the building.

Games

Most modern games use full 3D graphics, so 3D modelling is a huge part of the gaming industry. Nearly every 3D object in a game will have been made in 3D modelling software. Maya is a good example of a 3D modelling program used for games, and is one of the most widely used.

Below is a 3D model from Gears of War 3. On the left and right you can see all the polygons on the model. Polygons are what make up a model: the more polygons, the more detailed and realistic the model can look. A model with a low polygon count will look more jagged, because its curved surfaces have to be approximated with fewer, larger flat faces.
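To put a number on "jagged", here is a small illustrative sketch: it approximates a circle of radius r with an n-sided polygon and measures the largest gap between the polygon's edge and the true curve (the sagitta, r(1 - cos(pi/n))). The function name `max_gap` is hypothetical; the same idea applies to the curved silhouette of a 3D model.

```python
import math

def max_gap(radius: float, sides: int) -> float:
    """Largest distance between a circle and an inscribed regular polygon.

    The worst gap is at the midpoint of each edge: the sagitta
    r * (1 - cos(pi / n)).
    """
    if sides < 3:
        raise ValueError("a polygon needs at least 3 sides")
    return radius * (1 - math.cos(math.pi / sides))

for n in (6, 12, 24, 48):
    print(f"{n:>3} sides: gap = {max_gap(1.0, n):.4f}")
```

Each doubling of the side count cuts the gap to roughly a quarter, which is why adding polygons quickly makes a curved surface look smooth, and also why returns diminish after a point.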

Below you can see a model with a lower poly count. As you can see, it doesn't look as smooth as the Gears of War model.

Geometric Theory

When using 3D modelling software such as Maya, there are several basic things you need to understand.

Polygons - In 3D modelling, shapes are made up of polygons. Polygons are flat 2D shapes (usually triangles or quads) that together form the surface of a 3D shape; they are essentially its faces. As mentioned above, the more polygons, the smoother the model will appear. Higher-polygon models also take up more memory and storage and take longer to render, so a mobile game needs models with a much lower polygon count than a game designed to run on high-spec PCs.

Primitives - Primitives are the basic ready-made 3D shapes, such as cubes, spheres, cylinders and cones, that modelling software provides as starting points. There are a few different types: polygon primitives (3D shapes made up of polygons), volume primitives and NURBS primitives.
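Polygon primitives are usually generated from a few parameters. As an illustration (a hypothetical generator, not Maya's actual code), a UV sphere built from `segments` slices around its axis and `rings` bands from pole to pole has one vertex at each pole plus `segments * (rings - 1)` vertices in between, with triangles around the poles and quads everywhere else:

```python
import math

def uv_sphere(radius: float, segments: int, rings: int):
    """Build a UV sphere and return (vertices, faces).

    Faces are tuples of vertex indices: triangles touching the poles,
    quads everywhere else.
    """
    verts = [(0.0, radius, 0.0)]  # north pole, index 0
    for i in range(1, rings):  # interior latitude rings
        phi = math.pi * i / rings  # angle down from the north pole
        for j in range(segments):
            theta = 2 * math.pi * j / segments
            verts.append((radius * math.sin(phi) * math.cos(theta),
                          radius * math.cos(phi),
                          radius * math.sin(phi) * math.sin(theta)))
    verts.append((0.0, -radius, 0.0))  # south pole, last index
    south = len(verts) - 1

    def ring_index(i, j):
        """Index of vertex j on interior ring i (rings numbered from 1)."""
        return 1 + (i - 1) * segments + (j % segments)

    faces = []
    for j in range(segments):  # triangle fan around the north pole
        faces.append((0, ring_index(1, j), ring_index(1, j + 1)))
    for i in range(1, rings - 1):  # quad bands between interior rings
        for j in range(segments):
            faces.append((ring_index(i, j), ring_index(i + 1, j),
                          ring_index(i + 1, j + 1), ring_index(i, j + 1)))
    for j in range(segments):  # triangle fan around the south pole
        faces.append((ring_index(rings - 1, j + 1), ring_index(rings - 1, j), south))
    return verts, faces

v, f = uv_sphere(1.0, 20, 20)
print(len(v), len(f))  # 382 400
```

Raising `segments` and `rings` gives a smoother sphere at the cost of more polygons, which is the trade-off described above.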

Vertices, Edges and Faces - These are the different parts of a shape. A vertex (plural: vertices) is a corner point where edges meet. The edges are the lines where two faces meet, and the faces are the flat surfaces of the shape. In software such as Maya, each of these components has its own selection mode that can be switched on and off. When one of these modes is active, that type of component can be selected and modified individually.

Meshes - Shapes that are made up of edges, faces and vertices are known as "meshes" in 3D modelling.

[Image: Mesh of a turtle.]
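A mesh can be stored as a list of vertex positions plus a list of faces that index into it; the edges can then be derived from the faces. A minimal sketch for a cube, using a hypothetical data layout (real file formats such as OBJ or Maya's own files also store normals, UVs and more):

```python
# Eight corner positions of a unit cube centred on the origin.
VERTICES = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]

# Six quad faces, each a loop of indices into VERTICES.
FACES = [
    (0, 1, 3, 2), (4, 6, 7, 5),  # x = -0.5 and x = +0.5
    (0, 4, 5, 1), (2, 3, 7, 6),  # y = -0.5 and y = +0.5
    (0, 2, 6, 4), (1, 5, 7, 3),  # z = -0.5 and z = +0.5
]

def edges_from_faces(faces):
    """Each face is a loop of vertex indices; consecutive pairs are edges.

    An edge shared by two faces is stored only once (as a sorted pair).
    """
    edges = set()
    for face in faces:
        for a, b in zip(face, face[1:] + face[:1]):
            edges.add((min(a, b), max(a, b)))
    return edges

edges = edges_from_faces(FACES)
v, e, f = len(VERTICES), len(edges), len(FACES)
print(v, e, f, v - e + f)  # 8 12 6 2
```

The last number is a handy sanity check: for any closed mesh without holes, vertices - edges + faces = 2 (Euler's formula).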

Wireframe - A wireframe model is a model that has no shading, and all that is shown is the edges of the polygons/faces.

[Image: Wireframe model.]
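A wireframe view skips shading entirely: all it needs is each edge's two endpoints projected onto the screen. A minimal sketch, assuming a simple orthographic projection that drops the z axis after rotating the model (a hypothetical setup; real viewports use a full camera projection):

```python
import math

# Unit cube: 8 corners, and 12 edges joining corners that differ in one axis.
VERTICES = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]
EDGES = [(a, b) for a in range(8) for b in range(a + 1, 8)
         if sum(p != q for p, q in zip(VERTICES[a], VERTICES[b])) == 1]

def rotate_y(p, angle):
    """Rotate point p around the vertical (y) axis by angle radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)

def wireframe_lines(vertices, edges, angle):
    """Return 2D line segments: rotate each vertex, then drop z."""
    projected = [rotate_y(p, angle)[:2] for p in vertices]
    return [(projected[a], projected[b]) for a, b in edges]

for start, end in wireframe_lines(VERTICES, EDGES, math.radians(30)):
    print(f"({start[0]:+.2f}, {start[1]:+.2f}) -> ({end[0]:+.2f}, {end[1]:+.2f})")
```

No faces, normals or lights are involved, which is why wireframe views stay fast even for heavy models.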

Mesh Construction

There are different ways that meshes can be constructed in 3D modelling software. I will talk about these below.

Box modelling - Box modelling is when simple primitives such as boxes are used to create a very basic version of a final idea for a model. The basic shape is then gradually sculpted into the detailed final model.

Extrusion modelling - Extrusion modelling uses reference images to help build a model. You start with one image and trace a 2D outline over it, then add a second image taken from a different angle and extrude the 2D outline into 3D to match it. This method is often used when creating faces, heads and helmets. Often only half of the model is built, then it is duplicated and mirrored so that the finished model is perfectly symmetrical.
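The core of extrusion is simple: copy a 2D outline to a second depth and join matching points with side faces. Mirroring for symmetry then flips the x coordinate. A minimal sketch with hypothetical function names (Maya's own extrude tool has many more options, and welding the two halves together at the seam is left out here):

```python
def extrude(outline, depth):
    """Extrude a closed 2D outline (list of (x, y)) along z.

    Returns (vertices, faces): a front cap at z = 0, a back cap at
    z = depth, and one quad per outline edge joining the two caps.
    """
    n = len(outline)
    front = [(x, y, 0.0) for x, y in outline]
    back = [(x, y, float(depth)) for x, y in outline]
    vertices = front + back
    faces = [tuple(range(n)),                     # front cap
             tuple(range(2 * n - 1, n - 1, -1))]  # back cap, reversed order
    for i in range(n):
        j = (i + 1) % n
        faces.append((i, j, n + j, n + i))        # side quad
    return vertices, faces

def mirror_x(vertices):
    """Mirror a half-model across the x = 0 plane (x -> -x)."""
    return [(-x, y, z) for x, y, z in vertices]

# Half of a simple shape, outlined on the right-hand side of x = 0.
half_outline = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0), (0.0, 3.0)]
verts, faces = extrude(half_outline, 0.5)
print(len(verts), len(faces))  # 8 6
other_half = mirror_x(verts)
```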

Using common primitives - As with box modelling, basic primitive shapes such as cubes, pyramids, cylinders and spheres are important and are used a lot. Because 3D modelling programs offer so many tools and features, basic primitives can be modified and turned into almost anything the user wants. One example is the use of a cube in one of the Maya beginner guides. The task is to create a helmet, and instead of starting with a sphere and modifying that, it is actually easier to start with a cube and smooth it to make it more spherical.
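The cube-to-sphere idea can be illustrated by splitting each face of a cube into a grid and pushing every vertex out to a fixed distance from the centre. This is a simplified stand-in (vertex normalisation, sometimes called a "spherified cube"); it is not the algorithm behind Maya's Smooth command, which uses subdivision-surface rules, but the result is similarly round:

```python
import math

def subdivided_cube(divisions):
    """Unique surface vertices of a cube spanning [-1, 1], with each edge
    split into `divisions` segments so every face becomes a grid."""
    n = divisions
    points = []
    for i in range(n + 1):
        for j in range(n + 1):
            for k in range(n + 1):
                if 0 in (i, j, k) or n in (i, j, k):  # keep surface points only
                    points.append(tuple(-1 + 2 * c / n for c in (i, j, k)))
    return points

def spherify(points, radius=1.0):
    """Move each vertex along its direction from the centre onto a sphere."""
    result = []
    for x, y, z in points:
        length = math.sqrt(x * x + y * y + z * z)
        result.append((radius * x / length, radius * y / length, radius * z / length))
    return result

cube = subdivided_cube(4)
sphere = spherify(cube)
print(len(cube))  # 98 = 5**3 - 3**3
```

Before the push, the vertices sit anywhere from 1 (face centres) to about 1.73 (corners) from the centre; afterwards they all sit exactly on the sphere, while keeping the cube's even grid layout.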

Displaying 3D Polygon Animations

Application Programming Interface

API stands for application programming interface. A graphics API is a set of functions that programs use to send drawing commands to the GPU (graphics processing unit, or graphics card) through its driver, so that it renders 3D objects. A well-known example is OpenGL. OpenGL is defined as a set of functions that can be called by the client program, alongside a set of named integer constants. For example, the named constant GL_TEXTURE_2D corresponds to the decimal number 3553. OpenGL's functions look similar to functions in the C programming language, but OpenGL itself is language-independent. Below you can see an image of the OpenGL pipeline. Another example of an API is Microsoft's Direct3D.
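To make the "named integer constant" idea concrete: the OpenGL headers define names like GL_TEXTURE_2D as plain integers, so the name exists for the programmer while the driver only receives the number. A small sketch that copies a few values from the standard OpenGL headers into plain Python constants (rather than importing them from a binding) and looks them up in both directions:

```python
# Values as defined in the standard OpenGL headers (gl.h / glcorearb.h).
GL_CONSTANTS = {
    "GL_POINTS": 0x0000,
    "GL_LINES": 0x0001,
    "GL_TRIANGLES": 0x0004,
    "GL_DEPTH_TEST": 0x0B71,
    "GL_TEXTURE_2D": 0x0DE1,
}

# Reverse table, handy when a debugger only shows the raw number.
GL_NAMES = {value: name for name, value in GL_CONSTANTS.items()}

print(GL_CONSTANTS["GL_TEXTURE_2D"])  # 3553
print(GL_NAMES[3553])                 # GL_TEXTURE_2D
```

The reverse table only works here because the chosen values are all distinct; in the full headers several names share a value (GL_POINTS and GL_FALSE are both 0), so a real reverse lookup would need to return a list of names.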

Info from: https://en.wikipedia.org/wiki/OpenGL

Graphics Pipeline

The graphics pipeline is the sequence of steps used to turn a 3D scene from a game or 3D animation into the final image that the computer displays. Above I briefly mentioned Direct3D, which is Microsoft's API. In this section I will describe the graphics pipeline that Direct3D uses.

Input-Assembler Stage - Supplies data to the pipeline (triangles, lines and points)

Vertex-Shader Stage - Processes vertices, performing operations such as transformations, skinning and lighting.

Geometry-Shader Stage - Processes entire primitives.

Stream-Output Stage - Streams primitive data from the pipeline to memory on its way to the rasterizer. Data streamed out to memory can be recirculated back into the pipeline as input data.

Rasterizer Stage - Clips primitives and prepares primitives to be pixel shaded.

Pixel-Shader Stage - Generates per-pixel data such as colour from interpolated primitive data.

Output-Merger Stage - Generates the final pipeline result by combining output data such as pixel shader values, depth and stencil information.

There is also a small set of stages known as the tessellation stages. These stages convert high-order surfaces into triangles so that they can be rendered within the Direct3D pipeline. The tessellation stages are made up of the hull-shader, tessellator and domain-shader stages.

Info from: https://msdn.microsoft.com/en-us/library/windows/desktop/ff476882%28v=vs.85%29.aspx?f=255&MSPPError=-2147217396
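The first two stages can be sketched in plain Python to show how data flows: the input assembler reads a vertex buffer and an index buffer and groups them into triangles, and the vertex shader runs once per vertex to transform it. This is only an illustration under simple assumptions (a camera at the origin looking down -z, a hypothetical `focal` scale, no clipping); real Direct3D shaders are written in HLSL and run on the GPU.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def input_assembler(vertex_buffer: List[Vec3], index_buffer: List[int]):
    """Group a triangle-list index buffer into triangles (3 indices each)."""
    if len(index_buffer) % 3:
        raise ValueError("a triangle list needs a multiple of 3 indices")
    return [tuple(vertex_buffer[i] for i in index_buffer[k:k + 3])
            for k in range(0, len(index_buffer), 3)]

def vertex_shader(v: Vec3, offset: Vec3, focal: float = 1.0):
    """Move the vertex into view (translate), then perspective-project it.

    Returns (x, y, depth): x and y shrink as the vertex gets further away.
    """
    x, y, z = (v[0] + offset[0], v[1] + offset[1], v[2] + offset[2])
    depth = -z  # the camera looks down -z, so depth is positive in front
    return (focal * x / depth, focal * y / depth, depth)

# A square made of two triangles that share two vertices.
vertices = [(-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (1.0, 1.0, 0.0), (-1.0, 1.0, 0.0)]
indices = [0, 1, 2, 0, 2, 3]

triangles = input_assembler(vertices, indices)
projected = [[vertex_shader(v, offset=(0.0, 0.0, -4.0)) for v in tri]
             for tri in triangles]
print(projected[0])  # [(-0.25, -0.25, 4.0), (0.25, -0.25, 4.0), (0.25, 0.25, 4.0)]
```

The index buffer is why the square needs only four stored vertices instead of six: shared corners are referenced twice rather than duplicated.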

Rendering Techniques

Simulating every ray of light in a scene would take far too long, so renderers use a few families of more efficient techniques for rendering (transporting light):

Rasterization - Rasterization takes an image described in a vector graphics format (shapes) and converts it into a raster image (a grid of pixels or dots), so that it can be shown on a video display or printer, or stored in a bitmap file. Both 3D models and 2D rendering primitives can be rasterized. Rasterization geometrically projects the objects in a scene onto an image plane, without any advanced optical effects.
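A common way to rasterize a triangle is to test each pixel centre against the triangle's three edges using "edge functions" (signed areas); a pixel is covered when it lies on the inner side of all three edges. A minimal sketch that prints the result as text, with hypothetical function names and no anti-aliasing:

```python
def edge(a, b, p):
    """Signed area test: > 0 on one side of the edge a -> b, < 0 on the other."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(tri, width, height):
    """Return a height x width grid of booleans marking covered pixels."""
    a, b, c = tri
    if edge(a, b, c) < 0:  # normalise the winding so "inside" is >= 0
        b, c = c, b
    grid = []
    for y in range(height):
        row = []
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            row.append(edge(a, b, p) >= 0 and edge(b, c, p) >= 0
                       and edge(c, a, p) >= 0)
        grid.append(row)
    return grid

for row in rasterize([(1, 1), (14, 3), (5, 9)], 16, 10):
    print("".join("#" if covered else "." for covered in row))
```

Every pixel is tested independently, which is one reason GPUs can rasterize so quickly in parallel.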

Ray casting - Ray casting observes the scene from a specific point of view and calculates the image based only on geometry and very basic laws of reflection. Monte Carlo techniques may also be used to reduce artifacts.
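Ray casting in its simplest form: for each pixel, shoot one ray from the eye through that pixel, find what it hits, and shade the hit point with a simple rule. A minimal sketch with a single sphere and basic Lambert (cosine) shading; the scene, light direction and character ramp are made-up values for illustration:

```python
import math

def normalise(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def hit_sphere(origin, direction, center, radius):
    """Distance t to the nearer hit along a normalised ray, or None.

    Solves |origin + t*direction - center|^2 = radius^2.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

def cast(width, height, center=(0.0, 0.0, -3.0), radius=1.0, light=(-1.0, 1.0, 1.0)):
    """Cast one ray per pixel from an eye at the origin; return brightness rows."""
    light = normalise(light)
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map the pixel centre onto a screen at z = -1 spanning [-1, 1].
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            d = normalise((x, y, -1.0))
            t = hit_sphere((0.0, 0.0, 0.0), d, center, radius)
            if t is None:
                row.append(0.0)  # background
                continue
            hit = [t * k for k in d]
            n = normalise([h - c for h, c in zip(hit, center)])
            row.append(max(0.0, sum(a * b for a, b in zip(n, light))))
        image.append(row)
    return image

for row in cast(32, 16):
    print("".join(" .:-=+*#%@"[min(9, int(v * 10))] for v in row))
```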

Ray tracing - Similar to ray casting, but uses more advanced optical simulation (such as reflections, refractions and shadows), and usually uses Monte Carlo techniques.
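What ray tracing adds is recursion: when a ray hits a reflective surface, a new ray is traced from the hit point in the mirrored direction, up to a depth limit. A minimal sketch, assuming a made-up scene of one mirror sphere and one coloured sphere, with no lights and no Monte Carlo sampling, so it shows only the recursive structure:

```python
import math

# Hypothetical scene: (centre, radius, colour, is_mirror)
SCENE = [
    ((0.0, 0.0, -5.0), 1.0, (0.8, 0.8, 0.8), True),   # mirror ball in front of the eye
    ((0.0, 0.0, 5.0), 1.0, (1.0, 0.0, 0.0), False),   # red ball behind the eye
]
BACKGROUND = (0.0, 0.0, 0.0)

def intersect(origin, direction, center, radius):
    """Nearest hit distance in front of a normalised ray, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits at or behind the ray's start point
            return t
    return None

def trace(origin, direction, depth=0, max_depth=4):
    """Colour seen along a ray, recursively following mirror reflections."""
    nearest = None
    for center, radius, colour, mirror in SCENE:
        t = intersect(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, radius, colour, mirror)
    if nearest is None:
        return BACKGROUND
    t, center, radius, colour, mirror = nearest
    if not mirror or depth >= max_depth:
        return colour
    hit = [o + t * d for o, d in zip(origin, direction)]
    normal = [(h - c) / radius for h, c in zip(hit, center)]
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    # Mirror reflection: r = d - 2 (d . n) n
    reflected = [d - 2 * d_dot_n * n for d, n in zip(direction, normal)]
    bounced = trace(hit, reflected, depth + 1, max_depth)
    # Tint the reflected colour by the mirror's own colour.
    return tuple(m * r for m, r in zip(colour, bounced))

print(trace((0.0, 0.0, 0.0), (0.0, 0.0, -1.0)))  # (0.8, 0.0, 0.0)
```

The ray looking straight ahead hits the mirror, bounces back past the eye and picks up the red ball, which a single non-recursive ray cast could never see. Full ray tracers add lights, shadow rays and refraction on top of this structure.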

Monte Carlo Techniques - Monte Carlo techniques are computational algorithms that obtain numerical results through repeated random sampling. Monte Carlo methods are often used for physical and mathematical problems, usually when other methods are difficult or impossible to use.

Info from: https://en.wikipedia.org/wiki/Rendering_(computer_graphics)#Techniques
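A small example of a Monte Carlo estimate that comes up in rendering is how much light a flat surface receives from a uniformly bright sky. The exact answer is the integral of cos(theta) over the hemisphere above the surface, which equals pi. The sketch below estimates it by random sampling instead: for directions chosen uniformly over the hemisphere, cos(theta) is uniformly distributed between 0 and 1, and each sample is divided by the sampling density 1/(2*pi). The sample counts and seed are arbitrary choices.

```python
import math
import random

def estimate_sky_light(samples, seed=1):
    """Monte Carlo estimate of the integral of cos(theta) over a hemisphere.

    For directions drawn uniformly over the hemisphere (pdf = 1 / (2*pi)
    per unit solid angle), cos(theta) is uniform on [0, 1], so each sample
    contributes cos(theta) / pdf = cos(theta) * 2*pi.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()
        total += cos_theta * 2 * math.pi
    return total / samples

for n in (10, 1_000, 100_000):
    estimate = estimate_sky_light(n)
    print(f"{n:>7} samples: {estimate:.4f} (exact: {math.pi:.4f})")
```

The error shrinks roughly in proportion to 1/sqrt(n), which is why Monte Carlo renders look noisy at low sample counts and need many samples per pixel to converge.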

Rendering Engines

V-Ray

V-Ray is a rendering plug-in for 3D graphics software. It is used in film and video game production, product and industrial design, and architecture. V-Ray uses global illumination algorithms such as path tracing and photon mapping. It is especially popular for rendering environments and is the go-to engine for many artists.

Arnold

Arnold uses Monte Carlo ray tracing, and because of the way it is optimized it can send billions of spatially incoherent rays through a scene. It uses one level of diffuse inter-reflection to allow light to bounce off a wall or object and indirectly illuminate a subject. Arnold is mainly used by big studios for films, and has been used on Pacific Rim, Gravity, Cloudy with a Chance of Meatballs and more.

RenderMan

RenderMan is the rendering engine Pixar uses for all of its in-house productions. It was originally available only as a commercial product, used by Pixar and licensed to third parties, but a free, non-commercial version has since been released. RenderMan uses the RenderMan Interface Specification to define cameras, geometry, materials and lights, and also supports Open Shading Language. The engine used to be based on the Reyes algorithm, extended with support for ray tracing and global illumination. However, support for Reyes was removed in 2016, and RenderMan now uses Monte Carlo path tracing.

Parallel Rendering

Parallel programming or computing is a way of solving large computing problems efficiently by breaking them down into smaller tasks that can be worked on at the same time. Applied to computer graphics, this gives parallel rendering. It is useful because rendering can be very intensive and require lots of resources. Some parts of rendering are "embarrassingly parallel", meaning they take little or no effort to split into independent tasks; examples are individual pixels, objects and frames. A GPU (graphics processing unit/graphics card) is designed to handle embarrassingly parallel problems such as 3D rendering.
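Because each pixel (here, each row of pixels) can be computed without knowing anything about the others, rendering splits naturally into independent tasks. A minimal sketch using Python's `concurrent.futures`; the `shade` function is a hypothetical stand-in for real per-pixel work. Threads keep the example simple; for CPU-heavy pure-Python work, `ProcessPoolExecutor` (or a GPU) would be needed for an actual speed-up, because of Python's global interpreter lock.

```python
from concurrent.futures import ThreadPoolExecutor
import math

WIDTH, HEIGHT = 64, 48

def shade(x, y):
    """Hypothetical per-pixel work: a simple radial gradient."""
    dx, dy = x - WIDTH / 2, y - HEIGHT / 2
    return max(0.0, 1.0 - math.hypot(dx, dy) / 32.0)

def render_row(y):
    """Render one row; it depends on nothing but its own row number."""
    return [shade(x, y) for x in range(WIDTH)]

def render_parallel(workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in row order even if rows finish out of order.
        return list(pool.map(render_row, range(HEIGHT)))

def render_serial():
    return [render_row(y) for y in range(HEIGHT)]

image = render_parallel()
print(image == render_serial())  # True
```

The parallel and serial results are identical because no row reads or writes another row's data; that independence is exactly what "embarrassingly parallel" means.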

Info from: https://en.wikipedia.org/wiki/Parallel_rendering

[Image: IBM's supercomputer.]

3D modelling software

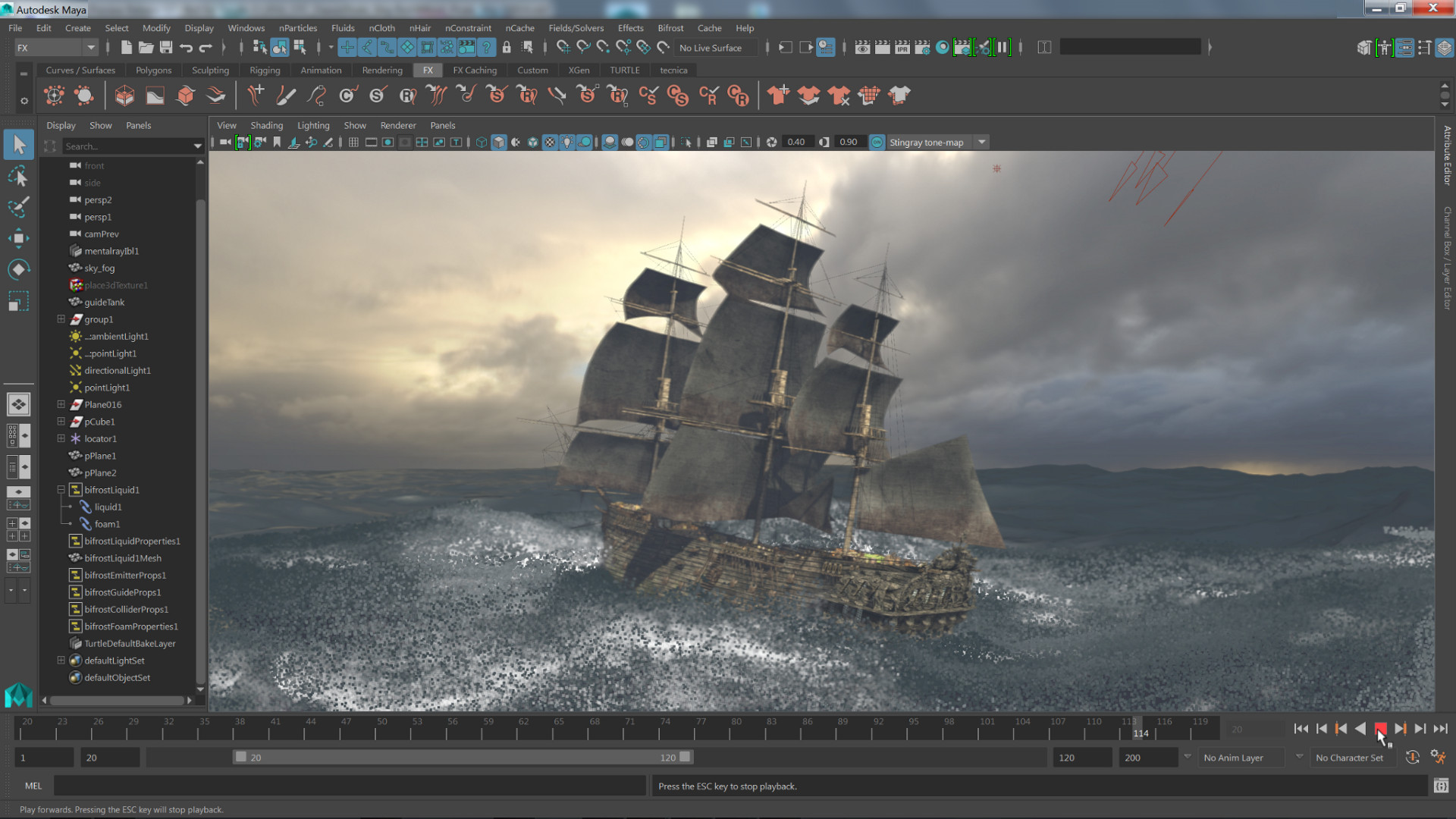

Autodesk Maya - Maya is a popular 3D modelling program used to create models, assets and animations for video games, films and architecture. Maya was originally developed by Alias Systems Corporation and released in 1998 for the IRIX operating system; it was later acquired by Autodesk, and support for IRIX was dropped. Maya features fluid effects, dynamic cloth simulation, fur and hair simulation, and of course all the basic 3D tools such as primitives and polygon modelling. Maya can use a few different file formats; the default is .mb (Maya Binary). Maya has also been used to create digital art and paintings; a well-known artist who uses Maya is Ray Caesar.

[Image: Maya 2017.]

Autodesk 3D Studio Max - 3D Studio Max (3DS Max) is another professional 3D tool from Autodesk. It is mainly used for creating 3D models and animations for games and images, but it is also used for films, TV ads and architecture. 3DS Max can use plugins, which are separate features that can be added to the main program. It includes features such as ambient occlusion, subsurface scattering, dynamic simulation, particles, radiosity, global illumination and more. Like Maya, 3DS Max also includes polygon modelling and NURBS modelling.

[Image: 3DS Max.]

Constraints

When using 3D modelling software to create models for games, you may run into restrictions along the way. If you're creating a mobile game, you will be limited in the overall quality of the game. The polygon count of your models will have to be much lower than in a game that runs on PC and console, because models with a high polygon count take up a lot of memory and storage. The same goes for textures and for the overall detail and effects in the game. Stores like the Apple App Store also have a size limit, so a game or app for Apple phones would have to be 4GB or smaller. You have to take technical limitations into account on any platform.
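A rough calculation shows why polygon counts and texture sizes matter on size-limited platforms. The sketch assumes an uncompressed vertex layout of position (3 floats), normal (3 floats) and UV coordinates (2 floats) at 4 bytes per float, 4-byte triangle indices, and 4-byte (RGBA) uncompressed texture pixels; real engines usually compress both meshes and textures, so treat these as upper-bound illustrations rather than figures for any specific game.

```python
FLOAT_BYTES = 4
FLOATS_PER_VERTEX = 3 + 3 + 2  # position + normal + UV (assumed layout)

def mesh_bytes(vertex_count, triangle_count, index_bytes=4):
    """Vertex data plus a triangle index buffer (3 indices per triangle)."""
    return (vertex_count * FLOATS_PER_VERTEX * FLOAT_BYTES
            + triangle_count * 3 * index_bytes)

def texture_bytes(width, height, bytes_per_pixel=4):
    """Uncompressed texture size, without mipmaps."""
    return width * height * bytes_per_pixel

MiB = 1024 * 1024
print(f"low-poly mesh  (5k verts, 10k tris):   {mesh_bytes(5_000, 10_000) / MiB:.2f} MiB")
print(f"high-poly mesh (50k verts, 100k tris): {mesh_bytes(50_000, 100_000) / MiB:.2f} MiB")
print(f"2048 x 2048 texture: {texture_bytes(2048, 2048) / MiB:.0f} MiB")
```

Ten times the polygons means roughly ten times the memory, and a single uncompressed 2048 x 2048 texture (16 MiB) outweighs either mesh, which is why textures are usually the first thing shrunk or compressed for mobile.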

You may also run into restrictions when using the 3D modelling software itself. If your computer is lower end or below the recommended specs for the program you are trying to run, the program could be much harder, or even impossible, to use. You also need to make sure that you have plenty of storage space for all the assets, because big games take up a lot of space. Modern games can take up 60GB of space, and some even more. So before a game has been put together and optimized, the individual assets, environments and so on could take up much more, especially if you have lots of ideas and make lots of variations.
