Most game development starts in a game engine. The game engine is the backbone of the game: it's what a game is built on, and it's how the developers add things to a game and tweak them. Game engines include things such as:

- Physics

- Collision

- Environments

- Models

- Sounds

- Artificial Intelligence (AI)

- Animation

- & more

However, there are different engines for different things, and not everything is done in the same engine. An example of something that could be created in another engine is the physics. The physics of a game includes things like collision and the way things move, for example how a car handles in a racing game and how it reacts when it crashes into something. A popular physics engine is the Havok engine, which has been used in many games.

Below I will talk about different game engines and how they have evolved over time. Firstly, there are 2 different types of game engine: open and closed. An open engine is an engine that everyone has access to, e.g. the Unreal Engine, which can be downloaded for free on PC. A closed engine is an engine that is only available to the developers that created it, e.g. the RAGE engine (Rockstar Advanced Game Engine).

Engine 1 - id Tech 1-6

The id Tech engine is quite well known because it was used to power DOOM and Quake, 2 very popular and influential FPS games. The id Tech engine is a closed engine, and only id Software has access to the full thing. However, source code was released for certain games to allow players to create their own maps and mods.

id Tech 1 (DOOM Engine, 1993)

The id Tech engine is famous for powering the original DOOM, a hugely influential first-person shooter that was released back in 1993. The engine has continued to be used over the years, and 6 versions have been created. The original version of the id Tech engine was actually just called the "Doom Engine," because at the time DOOM was the only game that used it. As you can see from the image above, the Doom engine is very dated by today's standards, but at the time it was revolutionary.

The game appears to be a first-person shooter with 3D environments; however, due to technical limitations at the time, the game levels are actually 2D. The character's line of sight needed to be parallel to the floor, the walls had to be perpendicular to the floors, and it wasn't possible to create bigger, multi-level structures or sloped structures.

The source code for the engine was released to the public, so that people could create their own levels and mods etc.

The levels used binary space partitioning...
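To make the idea of binary space partitioning a bit more concrete, here is a minimal Python sketch. It is not the Doom engine's code: the names (`Node`, `build`, `front_to_back`) and the choice of always using the first wall as the splitter are my own assumptions for illustration. It treats a level as 2D wall segments seen from above, splits them recursively into "in front of" and "behind" each wall, and then lists the walls in order starting from the viewer's side.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Wall = Tuple[Point, Point]  # a wall is a 2D line segment seen from above


@dataclass
class Node:
    wall: Wall                      # the wall whose line splits this region
    front: Optional["Node"] = None  # walls on the front side of that line
    back: Optional["Node"] = None   # walls on the back side


def side(wall: Wall, p: Point) -> float:
    """Positive if p is in front of the wall's line, negative if behind."""
    (ax, ay), (bx, by) = wall
    return (bx - ax) * (p[1] - ay) - (by - ay) * (p[0] - ax)


def build(walls: List[Wall]) -> Optional[Node]:
    """Recursively partition walls, using the first wall as the splitter."""
    if not walls:
        return None
    splitter, front, back = walls[0], [], []
    for w in walls[1:]:
        s0, s1 = side(splitter, w[0]), side(splitter, w[1])
        if s0 >= 0 and s1 >= 0:
            front.append(w)
        elif s0 <= 0 and s1 <= 0:
            back.append(w)
        else:
            # The wall crosses the splitter line: cut it at the crossing point.
            t = s0 / (s0 - s1)
            mid = (w[0][0] + t * (w[1][0] - w[0][0]),
                   w[0][1] + t * (w[1][1] - w[0][1]))
            first, second = (w[0], mid), (mid, w[1])
            (front if s0 > 0 else back).append(first)
            (back if s0 > 0 else front).append(second)
    return Node(splitter, build(front), build(back))


def front_to_back(node: Optional[Node], eye: Point) -> List[Wall]:
    """List walls starting with the side of each splitter that contains eye."""
    if node is None:
        return []
    if side(node.wall, eye) >= 0:
        near, far = node.front, node.back
    else:
        near, far = node.back, node.front
    return front_to_back(near, eye) + [node.wall] + front_to_back(far, eye)
```

The useful property is that the tree is built once per level, and the drawing order for any viewpoint then comes from a simple walk of the tree rather than from sorting every wall each frame.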

id Tech 2 (Quake I & II Engine, 1996, 1997)

The id Tech 2 engine had 2 releases; however, they are considered completely separate engines, and only the second one is known as the id Tech 2 engine.

| Quake I |

Here is a screenshot of Quake II, which runs on the final version of the id Tech 2 engine. As you can see, the quality of the textures and the models is much nicer than in the original Quake engine.

| Quake II |

The Quake II engine came with out-of-the-box support for OpenGL/hardware-accelerated graphics. The engine was also subdivided into components, which were put into dynamic link libraries. This meant that id Software could release enough source code to allow fan-made mods, but keep the rest of the engine proprietary.

The Quake II engine still used binary space partitioning, and lightmaps were used for the lighting in the levels.

id Tech 3 (Quake III Arena, 1999)

| Screenshot of Quake III Arena. |

The id Tech 3 engine was used to power id Software's game Quake III Arena. It was based on the previous version, id Tech 2; however, this time more of the code was rewritten. id Tech 3 required an OpenGL graphics accelerator to run.

id Tech 3's graphics in general are based around a shader system. This shader system stores the appearance of surfaces in text files known as "shader scripts." The shaders are rendered in several layers. Each of the layers contains a texture, a blend mode and texture orientation modes.
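As a rough illustration of what "rendered in several layers" means, here is a small Python sketch that combines layers for a single pixel. This is not the engine's shader script format: the `Layer` class and the blend mode names "replace", "add" and "filter" are labels I have assumed for this example, and texture orientation is left out entirely.

```python
from dataclasses import dataclass
from typing import List, Tuple

Color = Tuple[float, float, float]  # RGB channels in the range 0.0 to 1.0


@dataclass
class Layer:
    texel: Color  # colour sampled from this layer's texture
    blend: str    # "replace", "add" or "filter" (illustrative names)


def clamp(x: float) -> float:
    """Keep a channel inside the displayable 0.0 to 1.0 range."""
    return max(0.0, min(1.0, x))


def composite(layers: List[Layer]) -> Color:
    """Apply the layers in order, each one blending onto the result so far."""
    result: Color = (0.0, 0.0, 0.0)
    for layer in layers:
        if layer.blend == "replace":
            result = layer.texel
        elif layer.blend == "add":
            result = tuple(clamp(d + s) for d, s in zip(result, layer.texel))
        elif layer.blend == "filter":
            result = tuple(clamp(d * s) for d, s in zip(result, layer.texel))
        else:
            raise ValueError(f"unknown blend mode: {layer.blend}")
    return result
```

For example, a base wall texture followed by a "filter" layer darkens the wall (which is how a lightmap layer would typically behave), while an "add" layer brightens it, like a glow.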

The in-game videos use a format known as "RoQ." RoQ uses vector quantization to encode video and DPCM to encode audio.
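DPCM (differential pulse-code modulation) is easier to understand with a tiny example. The Python sketch below is generic DPCM and not RoQ's actual audio format: the function names and the fixed `step` size are assumptions for illustration. Instead of storing every audio sample, it stores the (rounded) difference from the previous reconstructed sample, which usually needs fewer bits.

```python
from typing import List


def dpcm_encode(samples: List[int], step: int = 4) -> List[int]:
    """Encode each sample as a quantized difference from the predicted value."""
    codes, predicted = [], 0
    for s in samples:
        code = round((s - predicted) / step)  # quantize the difference
        codes.append(code)
        predicted += code * step              # track what the decoder will see
    return codes


def dpcm_decode(codes: List[int], step: int = 4) -> List[int]:
    """Rebuild samples by accumulating the dequantized differences."""
    out, predicted = [], 0
    for code in codes:
        predicted += code * step
        out.append(predicted)
    return out
```

Because the encoder predicts from the value the decoder will reconstruct (not from the original sample), rounding errors do not pile up over time; each decoded sample stays within half a step of the original.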

id Tech 3's models are loaded in a format called "MD3." Instead of using skeletal animation, MD3 uses vertex movements. Compared to the animation in id Tech 2, id Tech 3's is much better because the animator is able to have more than 10 key frames per second. This means that more detailed animations can be made, and they will be more fluid and less shaky. Each model is split up into 3 separate parts: head, torso and legs. This is so each part can be animated differently. For example, the legs could be running while the head and torso use different animations.
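Here is a minimal Python sketch of vertex animation in general, to show why more key frames give smoother movement. It does not read the MD3 file format; `Frame`, `lerp_frames` and `pose_at` are names I made up, and linear blending between neighbouring key frames is an assumption about how the in-between poses are produced.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Frame = List[Vertex]  # one stored pose: a position for every vertex


def lerp_frames(a: Frame, b: Frame, t: float) -> Frame:
    """Blend two key frames; t=0 gives a, t=1 gives b."""
    return [
        tuple(p + (q - p) * t for p, q in zip(va, vb))
        for va, vb in zip(a, b)
    ]


def pose_at(frames: List[Frame], fps: float, time_s: float) -> Frame:
    """Return the interpolated pose at time_s for a looping animation."""
    position = (time_s * fps) % len(frames)
    i = int(position)            # key frame we are coming from
    j = (i + 1) % len(frames)    # key frame we are heading to (wraps around)
    return lerp_frames(frames[i], frames[j], position - i)
```

With separate frame lists for the head, torso and legs, each part could call `pose_at` with its own animation and time, which is the benefit of splitting the model up.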

id Tech 3 features different types of shadows. One of them is the common "blob shadow" which just places a black fading circle under the player. The two other types are able to cast an accurate shadow of the player model.

The engine uses a "snapshot" system which sends information about the game frames to the client. The object interaction on the server is updated at a rate independent of the rate of the clients, and the server sends the state of the objects at that point in time to all the clients.

Quake III Arena is an online game, so of course it has an anti-cheat system. The anti-cheat is called "pure server," and is automatically enabled for everyone that joins the server. Pure mode checks the files of everyone that joins, and if someone's data pack fails one of the checks, they will be removed from the server.
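The snapshot idea can be sketched in a few lines of Python. This is only an illustration, not the engine's networking code: the `Snapshot` class, the single "rocket" object, the 1D position and the client-side blending between two snapshots are all assumptions I have made to keep it short. The point is that the server advances the world in fixed ticks, while a client can ask for the state at any time it likes.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Snapshot:
    server_time: float           # server time when the state was captured
    positions: Dict[str, float]  # object name -> position (1D for brevity)


def run_server(duration: float, tick: float, speed: float) -> List[Snapshot]:
    """Advance the world in fixed ticks and record one snapshot per tick."""
    snapshots, x = [], 0.0
    steps = int(round(duration / tick))
    for n in range(steps + 1):
        snapshots.append(Snapshot(n * tick, {"rocket": x}))
        x += speed * tick
    return snapshots


def client_view(snapshots: List[Snapshot], render_time: float) -> Dict[str, float]:
    """Blend the two snapshots that surround render_time."""
    for older, newer in zip(snapshots, snapshots[1:]):
        if older.server_time <= render_time <= newer.server_time:
            span = newer.server_time - older.server_time
            t = (render_time - older.server_time) / span
            return {
                name: pos + (newer.positions[name] - pos) * t
                for name, pos in older.positions.items()
            }
    return dict(snapshots[-1].positions)  # no newer data yet: hold the last state
```

A client drawing at 144 frames per second and one drawing at 30 would both read from the same list of snapshots, which is what makes the server rate independent of the client rate.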
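A file check like this can be sketched as a checksum comparison. The Python below is a guess at the general approach, not how Quake III Arena actually does it: the function names are hypothetical, and SHA-256 is simply a hash I picked from Python's standard library.

```python
import hashlib
from typing import Dict, List


def checksum(data: bytes) -> str:
    """Fingerprint a data pack's contents."""
    return hashlib.sha256(data).hexdigest()


def failed_packs(server_packs: Dict[str, bytes],
                 client_packs: Dict[str, bytes]) -> List[str]:
    """Return the names of client packs that do not match the server's copies."""
    allowed = {name: checksum(data) for name, data in server_packs.items()}
    return sorted(
        name for name, data in client_packs.items()
        if allowed.get(name) != checksum(data)
    )
```

If `failed_packs` returns anything, the server in this sketch would refuse the player. A modified pack fails because its checksum changes, and an extra pack fails because the server has no checksum for it at all.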

id Tech 4 (DOOM 3 Engine, 2004)

| Screenshot of DOOM 3. |

id Tech 4's models use skeletal animation; however, this proved to be quite CPU intensive, so id had to optimise it.

id Tech 4 was created to be used in DOOM 3, which is a game that takes place in primarily dark environments. Because of this, the engine couldn't really handle large outdoor daytime areas at first. MegaTexture rendering technology was later added to address this, which made id Tech 4 much better at handling large outdoor areas. MegaTexture allowed detailed environments, and could also store information about the terrain. For example, the walking sound would change depending on what surface the player was walking on.

For scripting, a language similar to C++ was used. Scripting is used for mod creation and for controlling the enemies, weapons and map events in DOOM 3.

id Tech 5 (2011)

| Screenshot of RAGE. |

id Tech 5 came out a long time after id Tech 4, and is a far superior version of the engine. The engine was first used in id's game RAGE, which released in 2011.

When the engine was first shown, it featured 20GB of texture data, which meant it could support much higher texture resolutions and a more dynamic world. The engine was also able to automatically load textures into memory as needed, so the developers no longer had to worry about texture limits/constraints. This made developing for several different platforms easier. Many more features were also available in id Tech 5, such as softer shadows and particles, and effects like depth of field, motion blur, and post processing.
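Loading textures "as needed" is basically a cache. The Python sketch below shows one common way to do that, a least-recently-used cache with a memory budget. It is not id Tech 5's streaming system: the `TextureCache` class, the `load` callback and the byte budget are all hypothetical.

```python
from collections import OrderedDict
from typing import Callable


class TextureCache:
    """Keeps only the most recently used textures within a memory budget."""

    def __init__(self, budget: int, load: Callable[[str], bytes]) -> None:
        self.budget = budget  # maximum number of resident bytes
        self.load = load      # fetches texture data, e.g. from disk (hypothetical)
        self.resident: "OrderedDict[str, bytes]" = OrderedDict()
        self.used = 0

    def get(self, name: str) -> bytes:
        if name in self.resident:
            self.resident.move_to_end(name)  # mark as recently used
            return self.resident[name]
        data = self.load(name)
        # Evict the least recently used textures until the new one fits.
        while self.resident and self.used + len(data) > self.budget:
            _, evicted = self.resident.popitem(last=False)
            self.used -= len(evicted)
        self.resident[name] = data
        self.used += len(data)
        return data
```

The game code just calls `get` for whatever texture it wants to draw, and the cache decides what stays in memory. That is why the artists can create far more texture data than would ever fit in memory at once.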

id Tech 6 (2016)

| DOOM 2016 |

The engine uses dynamic lighting and virtual textures (known as MegaTexture in id Tech 4 and 5), but in id Tech 6 they are much higher quality and are able to use realtime lighting and shadows. Many effects are used, such as motion blur, bokeh depth of field, HDR, bloom, shadow mapping, screen space reflections, directional occlusion, and FXAA, SMAA and TSSAA anti-aliasing.

Engine 2 - Source Engine

The Source engine is also quite well known because it has been used for Valve's extremely popular games, such as Half-Life 2 and Portal. The Source engine is open and an SDK is available. Games such as Garry's Mod were made using the Source engine. (GMod was developed by Facepunch Studios.)

The Source engine is the successor of Valve's original engine, GoldSource (aka GoldSrc). Unlike the id Tech engine, the Source engine isn't updated with different numbered versions; it is just updated.

Source 2006 (Half-Life 2)

Source 2006 is the term used for the branch of the Source engine that included technology used in Valve's Half-Life 2: Episode 1. This engine included new effects like high dynamic range rendering and colour correction. These features were showcased in Valve's tech-demo-like game, Half-Life 2: Lost Coast. Other smaller features like Phong shading were introduced in HL2: Ep 1.

Source 2007 (The Orange Box)

The 2007 Source engine was made for Valve's new release The Orange Box, which contained Team Fortress 2, Half-Life 2, Ep 1 and 2, and their new game Portal. A new threaded particle system replaced the hard-coded effects for all the games in The Orange Box. To support this, Valve created an in-process tools framework, which also supported the initial builds of Source Filmmaker, Valve's free tool that people can use to create animations using Source assets. The facial animation system was updated for "feature film and TV quality" by making it hardware-accelerated.

Since The Orange Box was released on multiple platforms, the code was refactored, and the Source engine was able to take advantage of multi-core CPUs. However, until the release of Left 4 Dead 2, the performance on PC was unstable. At a later date, Valve backported the multi-core CPU support to Team Fortress 2 and Day of Defeat: Source.

The Xbox 360 version of The Orange Box was developed by Valve in house, and the PlayStation 3 version was developed by Electronic Arts. The engine supported the Xbox 360 quite well, and the support for the console was built into the engine's code. The PS3 version by EA had many issues, however.

Left 4 Dead Source

The next version of the Source engine is simply known as the Left 4 Dead branch. During the development of Left 4 Dead, the Source engine was completely overhauled. More features were added, such as split-screen multiplayer, more post-processing effects, event scripting and a dynamic AI director, made possible by further support for multi-core CPUs. This branch of the Source engine would continue to be used and updated for Alien Swarm and Portal 2.

Source 2 Engine

Source 2 is Valve's newest engine, and is yet to make its way into more games. Currently, the only game to use the Source 2 engine is Dota 2. Source 2 features a rendering path for the Vulkan API, and it will use Valve's in-house physics engine, Rubikon.

| Dota 2 running on the Source 2 engine. |

Engine 3 - Unity

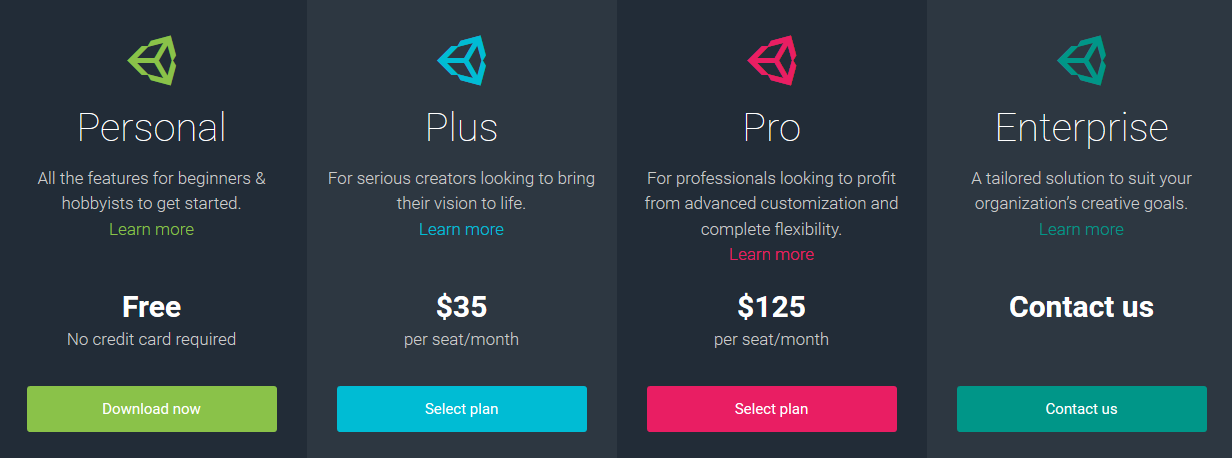

The Unity engine is very popular, since it can be used on several different platforms. It is used to develop games for the PC, consoles, websites, and mobile phones. The engine is open, and a free version and paid versions are available.

The Unity engine uses different APIs for different platforms. On Windows and Xbox it uses Direct3D; on Mac, Linux and (again) Windows, OpenGL is used; and on phones (Android and iOS), OpenGL ES is used. Unity can also be used on web pages. For all the different platforms, Unity features texture compression and resolution settings. Unity includes many effects and technical features, such as bump, reflection and parallax mapping, and SSAO (screen space ambient occlusion). The engine is very versatile, and there are lots of options for each platform that it supports. Because of this versatility, it is popular among developers who make games for multiple platforms.

Rather than relying only on their own development tools, Nintendo bundle Unity with Wii U development kits.

Engine features and comparisons

Rendering

Rendering is the process of generating an image from a 2D or 3D scene file. A scene file describes all the objects in the scene in a language or data structure, along with things such as the viewpoint, textures, lighting and shading. The scene file is then passed to a rendering program, which produces the final image. The "graphics pipeline" is the sequence of steps that turns a 3D representation into a 2D image on a rendering device, e.g. the GPU (graphics processing unit). The term "rendering" is also used in video editing, where it means calculating the effects in a video editing program to produce the final video.

The Unity engine supports several rendering paths, each made up of different passes:

Forward Rendering Path - The ForwardBase pass renders ambient light, lightmaps, the main directional light and not-important (vertex/SH) lights at once. The ForwardAdd pass is used for any additive per-pixel lights.

Deferred Shading Path - The Deferred pass renders everything that is needed for the lighting. In the built-in shaders, this is diffuse colour, specular colour, smoothness, world space normal and emission. It also adds lightmaps, reflection probes and ambient lighting into the emission channel.

Legacy Deferred Lighting Path - The PrepassBase pass renders normals and the specular exponent. The PrepassFinal pass renders the final colour by combining textures, lighting and emissive material properties.

Legacy Vertex Lit Rendering Path - Vertex lighting is often used on platforms that do not support programmable shaders, so multiple passes for lightmapped and non-lightmapped objects have to be written explicitly.

- Vertex pass is used for non-lightmapped objects. All lights are rendered at once using the fixed-function OpenGL/Direct3D lighting model.

- VertexLMRGBM pass is used for lightmapped objects when the lightmaps are RGBM encoded (PC and console). No realtime lighting is used.

- VertexLM pass is used for lightmapped objects on mobile platforms when the lightmaps are double-LDR encoded. No realtime lighting is used, and textures are combined with the lightmap.

Source: https://docs.unity3d.com/Manual/SL-RenderPipeline.html

Artificial Intelligence

Artificial intelligence is used in video games for NPCs (non-player characters). These are characters in the game that are controlled by the computer, and the goal is to have them act as human as possible. Since these characters are being artificially controlled, it is possible that they can be way too good and make the game unfair. In first-person shooter games like Counter-Strike, the skill of the AI needs to be toned down, otherwise their accuracy will be perfect and beyond what is achievable by normal humans.

AI is handled differently in different engines. For example, Unreal Engine 4 uses something known as "behaviour trees." Behaviour trees contain different actions that branch off to more actions, and they all connect together. I will put a screenshot below. A blackboard is also used, which acts as the AI's memory. It stores all the values that the behaviour tree needs to use.
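One simple way to "tone down" a bot is to add a random error to its aim. The Python sketch below is my own illustration and not how Counter-Strike's bots work: `aim_angle`, the `skill` scale from 0.0 to 1.0 and the 10 degree maximum error are all assumptions.

```python
import random


def aim_angle(true_angle: float, skill: float, rng: random.Random,
              max_error_deg: float = 10.0) -> float:
    """Return the angle a bot fires at; skill 1.0 is perfect, 0.0 is worst."""
    skill = max(0.0, min(1.0, skill))         # keep skill inside 0.0 to 1.0
    spread = (1.0 - skill) * max_error_deg    # lower skill means a wider miss
    return true_angle + rng.uniform(-spread, spread)
```

A bot with skill 1.0 always hits the exact angle, which is the "perfect accuracy" problem described above, while an "easy" bot with skill 0.5 can be off by up to 5 degrees either way.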
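To show the idea of a behaviour tree and a blackboard in code, here is a very small Python sketch. It has nothing to do with Unreal Engine 4's actual API: the `sequence` and `selector` helpers, the `guard` tree and the blackboard keys are all made up for this example, and real behaviour trees usually also have a "still running" result that I have left out.

```python
from typing import Callable, Dict, List

Blackboard = Dict[str, object]       # shared memory the tree reads and writes
Node = Callable[[Blackboard], bool]  # a node returns True on success


def sequence(children: List[Node]) -> Node:
    """Succeeds only if every child succeeds, in order."""
    return lambda bb: all(child(bb) for child in children)


def selector(children: List[Node]) -> Node:
    """Tries children in order and succeeds at the first one that succeeds."""
    return lambda bb: any(child(bb) for child in children)


def can_see_player(bb: Blackboard) -> bool:
    return bool(bb.get("player_visible"))


def attack(bb: Blackboard) -> bool:
    bb["action"] = "attack"
    return True


def patrol(bb: Blackboard) -> bool:
    bb["action"] = "patrol"
    return True


# Attack if the player is visible, otherwise fall back to patrolling.
guard = selector([sequence([can_see_player, attack]), patrol])
```

Calling `guard({"player_visible": True})` leaves "attack" in the blackboard, and with `False` it leaves "patrol". The tree itself holds no state; everything the AI "remembers" lives in the blackboard.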

A simpler engine like GameMaker uses drag and drop. When you go into the properties of an object, you are presented with a menu with lots of options and categories on the right side. You simply drag and drop the options you want into the events column on the left, and you can then edit their values. GameMaker also allows users to write code; however, since GameMaker is a good beginner tool for game developers, drag and drop is quite popular.

Middleware

Middleware is software that provides additional features and services to applications, and is often called "software glue." In gaming, middleware is used in game engines to handle certain technical aspects of the game. An example of a popular type of middleware is the Havok physics engine, which is an engine that specialises in handling physics (collision, rag-dolls, how objects move and react, etc.). Havok has been used in over 600 video games, and is also used in software like Maya.

Another middleware program that has become increasingly popular over the years is Nvidia GameWorks, which includes some really nice effects. GameWorks is made up of several components.

VisualFX - This handles the rendering of water, fire, smoke, depth of field, FaceWorks, HairWorks, HBAO+ (ambient occlusion) and TXAA (temporal anti-aliasing).

PhysX - For physics and detailed particles. Below is a screenshot of Borderlands 2, which uses PhysX for detailed particles. PhysX is also used for fluids.

OptiX - For lighting and rays.

CoreSDK - Allows better integration for Nvidia features in games.
